Prompt Engineering Techniques with Spring AI

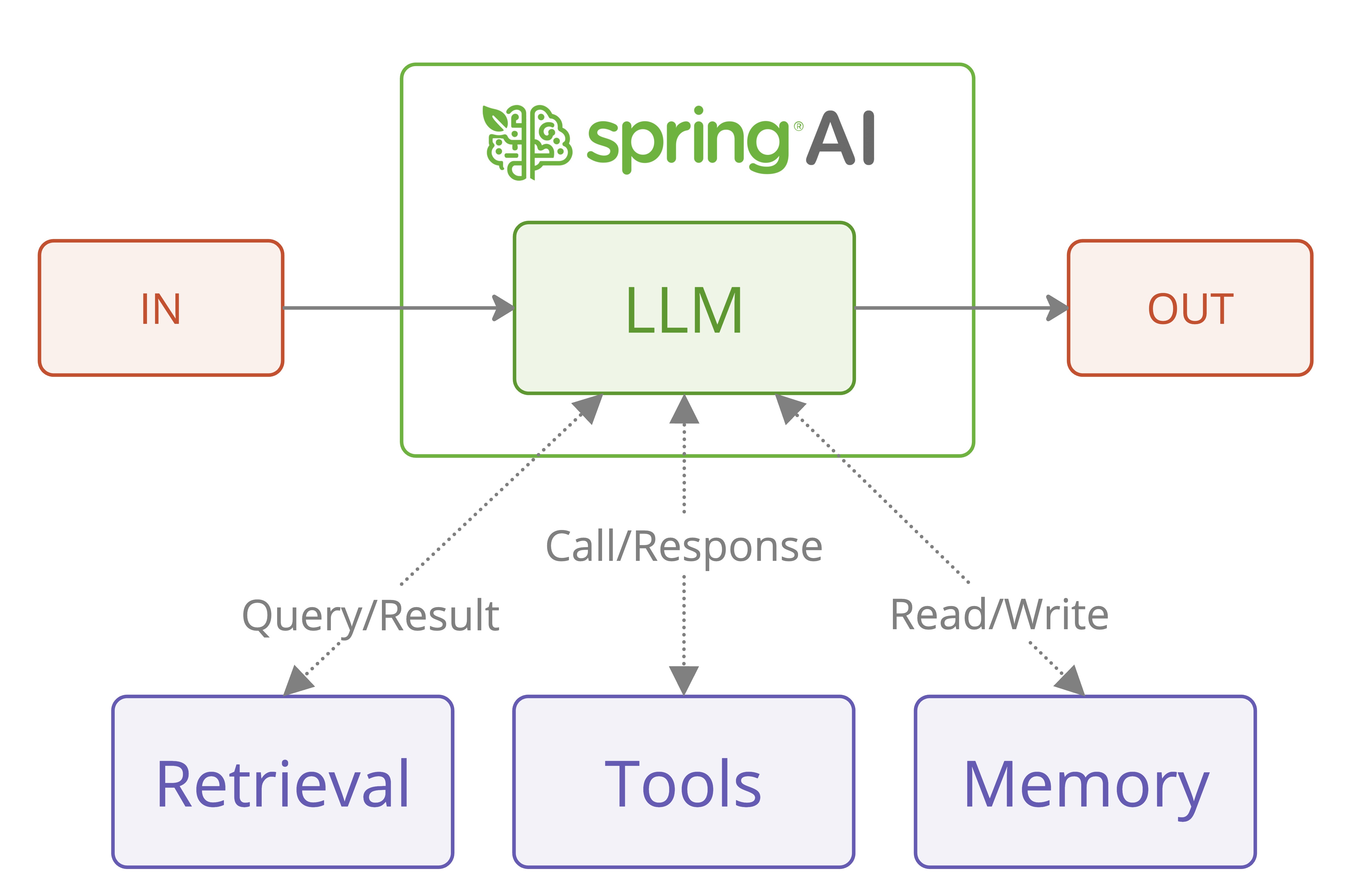

This blog post demonstrates practical implementations of Prompt Engineering techniques using Spring AI.

The examples and patterns in this article are based on the comprehensive Prompt Engineering Guide, which covers the theory, principles, and patterns of effective prompt engineering.

This post shows how to translate those concepts into working Java code using Spring AI's fluent `ChatClient` API.
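As a rough illustration of that fluent style, here is a minimal sketch that sends a zero-shot classification prompt through `ChatClient`. It assumes Spring AI 1.0 or later on the classpath and some configured `ChatModel` implementation. The class name `ChatClientSketch`, the method `classifySentiment`, and the prompt text are hypothetical and are not taken from the demo repository.

```java
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.model.ChatModel;
import org.springframework.ai.chat.prompt.ChatOptions;

// Sketch only: assumes Spring AI 1.0+ on the classpath; names and prompt text are hypothetical.
public class ChatClientSketch {

    // Sends a zero-shot classification prompt to the supplied ChatModel
    // and returns the model's raw text reply.
    static String classifySentiment(ChatModel chatModel, String review) {
        ChatClient chatClient = ChatClient.create(chatModel);
        return chatClient.prompt()
                // System message: the task instruction, with no worked examples (zero-shot).
                .system("Classify movie reviews as POSITIVE, NEUTRAL or NEGATIVE. "
                        + "Reply with the label only.")
                // User message: the input to classify.
                .user("Review: " + review)
                // Per-request options: low temperature and a small token budget for a one-word label.
                .options(ChatOptions.builder()
                        .temperature(0.1)
                        .maxTokens(5)
                        .build())
                .call()      // blocking call to the model
                .content();  // text of the first generation
    }
}
```

In a Spring Boot application you would more typically inject the auto-configured `ChatClient.Builder` and call `build()` instead of creating the client from a `ChatModel` directly. The `temperature` and `maxTokens` values here are illustrative choices for a single-label answer, not recommendations from the guide.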

For convenience, the examples are structured to follow the same patterns and techniques outlined in the original guide.

The demo source code used in this article is available at: https://github.com/spring…